Automating JSON File Loads from an S3 Bucket to DynamoDB Using AWS Lambda

Description:

The requirement is to process a JSON file from an S3 bucket into DynamoDB. We need an automated process that loads the data from the S3 bucket into DynamoDB, and here we use a Lambda function written in Python with boto3 to achieve it. Whenever a new file is uploaded to the S3 bucket, the Lambda function is triggered automatically and writes the data to DynamoDB.

Use Case: Assume a scenario in which every new invoice entry must be moved to a destination database.
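To make the trigger mechanism concrete, here is a minimal sketch of the event that S3 hands to Lambda when a file is uploaded, and how the bucket name and object key can be read from it. The bucket name and key in `sample_event` are made up for illustration, and the event is trimmed to the fields used later in this post.

```python
from urllib.parse import unquote_plus

# Trimmed-down S3 event notification. The bucket name and key are
# hypothetical values chosen for illustration only.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-invoice-bucket"},
                "object": {"key": "invoices/new+invoice.json"},
            },
        }
    ]
}


def extract_s3_locations(event):
    """Return a list of (bucket, key) pairs, one per record in the event."""
    locations = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (a space becomes '+'), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        locations.append((bucket, key))
    return locations


print(extract_s3_locations(sample_event))
# [('example-invoice-bucket', 'invoices/new invoice.json')]
```

Looping over every record, rather than reading only the first, is a defensive choice in case a single invocation carries more than one record.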

Step 1:

1. Sign in to the AWS Management Console and open the Amazon S3 console

2. Choose Create bucket. The Create bucket wizard opens

3. In Region, choose the AWS Region where you want the bucket to reside, then upload the JSON file

To preview the data, click “select from” and select the file format

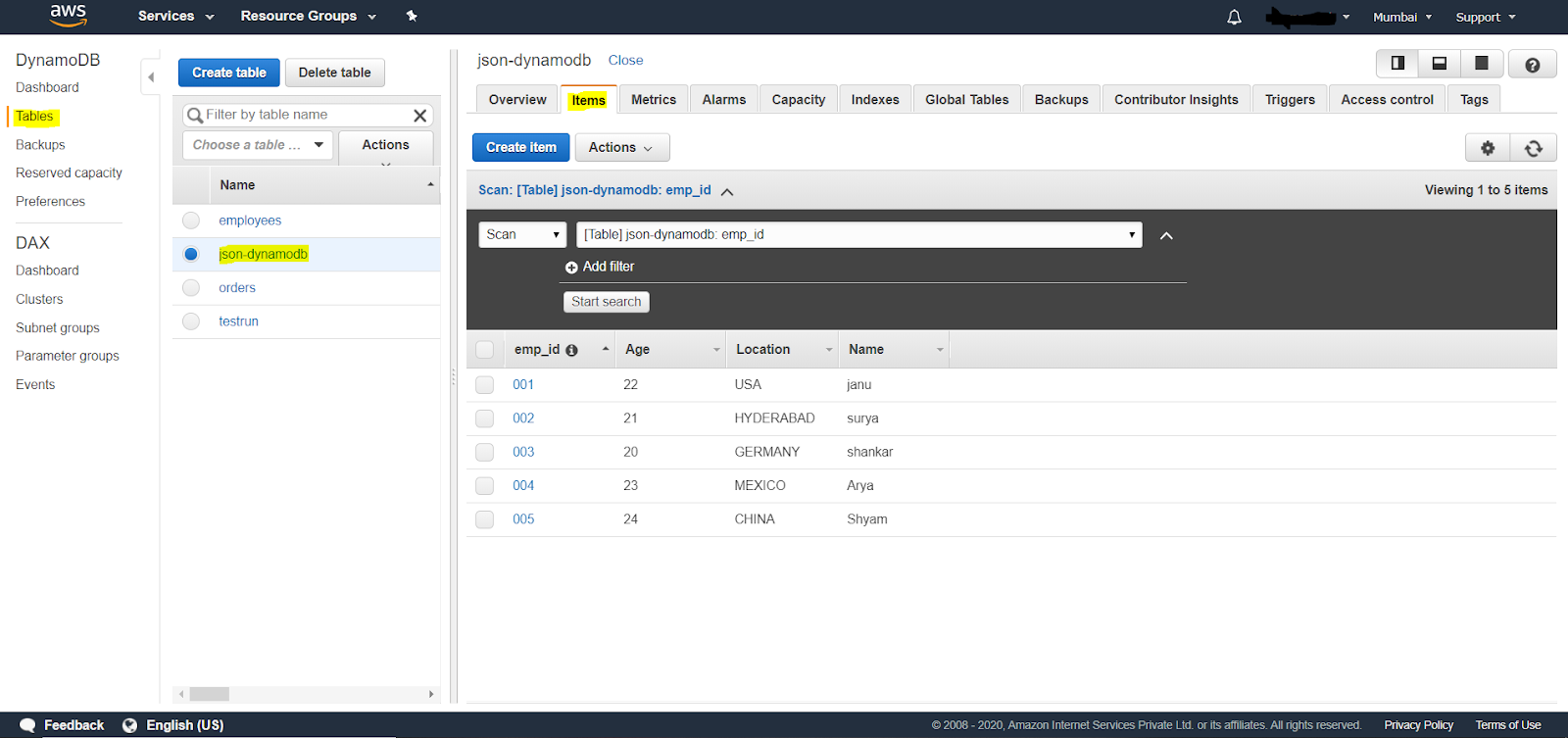
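The console steps above can also be scripted. The following is a rough sketch, not the method used in this post: it assumes you pass in a boto3 S3 client, and the bucket name, region, and key are placeholders you would choose yourself.

```python
import json


def create_bucket_kwargs(bucket_name, region):
    """Build the arguments for the S3 create_bucket call.

    us-east-1 is the default region and must not be passed as a
    LocationConstraint; any other region needs one.
    """
    kwargs = {"Bucket": bucket_name}
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket_and_upload(s3_client, bucket_name, region, key, record):
    """Create the bucket, then upload `record` (a dict) as a JSON object."""
    s3_client.create_bucket(**create_bucket_kwargs(bucket_name, region))
    s3_client.put_object(
        Bucket=bucket_name,
        Key=key,
        Body=json.dumps(record).encode("utf-8"),
        ContentType="application/json",
    )


# Example call (hypothetical names, requires AWS credentials):
#   import boto3
#   s3 = boto3.client("s3", region_name="ap-south-1")
#   create_bucket_and_upload(s3, "example-invoice-bucket", "ap-south-1",
#                            "employee.json", {"emp_id": "E100"})
```

Passing the client in as an argument keeps the function easy to try out with a stand-in object before pointing it at a real account.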

Step 2: Create a table in DynamoDB with "emp_id" as the primary key; the remaining attributes can be anything
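For readers who prefer code over the console, the table from Step 2 could be created roughly like this. This is a sketch under assumptions: the post does not say which type "emp_id" has, so a string key (`"S"`) is assumed here, and on-demand billing is an arbitrary choice to keep the example short.

```python
def emp_table_definition(table_name):
    """Describe a table whose only key is the partition key 'emp_id'."""
    return {
        "TableName": table_name,
        "KeySchema": [{"AttributeName": "emp_id", "KeyType": "HASH"}],
        # Assumption: emp_id is stored as a string. Use "N" for a numeric key.
        "AttributeDefinitions": [
            {"AttributeName": "emp_id", "AttributeType": "S"}
        ],
        # Assumption: on-demand billing, so no capacity units are needed.
        "BillingMode": "PAY_PER_REQUEST",
    }


def create_emp_table(dynamodb_resource, table_name):
    """Create the table and block until DynamoDB reports that it exists."""
    table = dynamodb_resource.create_table(**emp_table_definition(table_name))
    table.wait_until_exists()
    return table


# Example call (requires AWS credentials):
#   import boto3
#   create_emp_table(boto3.resource("dynamodb"), "json-dynamoDB")
```

Only the key attribute is declared. DynamoDB does not need the other attributes defined up front, which is why the remaining data can be anything.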

Step 3: Create IAM Role and Policy

2. Create a role: under AWS services, select Lambda, then attach the previously created policy

3. Enter a role name of your choice and click “create role”
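The post does not show the policy itself, so the following is only a guess at a minimal one for this workflow: read objects from the bucket, put items into the table, and write logs to CloudWatch. The bucket name, account ID, and table ARN are placeholders, and `lambda_execution_policy` is a hypothetical helper name.

```python
import json


def lambda_execution_policy(bucket_name, table_arn):
    """Build a least-privilege policy document for the loader function."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadUploadedJson",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                "Sid": "WriteItems",
                "Effect": "Allow",
                "Action": ["dynamodb:PutItem"],
                "Resource": table_arn,
            },
            {
                "Sid": "WriteLogs",
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
        ],
    }


# Trust policy that lets the Lambda service assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Placeholder bucket name and table ARN, for illustration only.
policy = lambda_execution_policy(
    "example-invoice-bucket",
    "arn:aws:dynamodb:us-east-1:123456789012:table/json-dynamoDB",
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the policy editor in the IAM console. Selecting Lambda as the trusted service in the console produces a trust relationship equivalent to `LAMBDA_TRUST_POLICY`.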

Step 4: Move on to the AWS Lambda service and create a Lambda function

Step 5: Create a trigger configuration for the S3 bucket where the JSON file is uploaded
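The trigger from Step 5 corresponds to a bucket notification configuration. A sketch of the equivalent boto3 call is below. The `.json` suffix filter is an assumption added here so that only JSON uploads invoke the function, and the Lambda ARN is a placeholder.

```python
def s3_trigger_configuration(lambda_arn, suffix=".json"):
    """Notification config that invokes the function on every new object."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                "Events": ["s3:ObjectCreated:*"],
                # Assumption: only keys ending in `suffix` should fire the trigger.
                "Filter": {
                    "Key": {
                        "FilterRules": [{"Name": "suffix", "Value": suffix}]
                    }
                },
            }
        ]
    }


def attach_trigger(s3_client, bucket_name, lambda_arn):
    """Replace the bucket's notification configuration with the trigger."""
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=s3_trigger_configuration(lambda_arn),
    )


# Example call (requires AWS credentials, placeholder ARN):
#   import boto3
#   attach_trigger(
#       boto3.client("s3"),
#       "example-invoice-bucket",
#       "arn:aws:lambda:us-east-1:123456789012:function:example-loader",
#   )
```

When the trigger is added through the Lambda console, permission for S3 to invoke the function is granted for you. When scripting it, that permission has to be added separately before S3 will accept the configuration.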

Lambda function code (console code editor preview):

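import json
from urllib.parse import unquote_plus

import boto3

s3_client = boto3.client('s3')
# boto3 service names are lowercase: 'dynamodb', not 'dynamoDB'
dynamoDB = boto3.resource('dynamodb')


def lambda_handler(event, context):
    # Bucket and object key of the file that triggered the function
    bucket = event['Records'][0]['s3']['bucket']['name']
    # Keys in S3 event notifications are URL-encoded, so decode before use
    json_file_name = unquote_plus(event['Records'][0]['s3']['object']['key'])

    # Download the file and parse it into a dict
    json_object = s3_client.get_object(Bucket=bucket, Key=json_file_name)
    jsonFileReader = json_object['Body'].read()
    jsonDict = json.loads(jsonFileReader)

    # DynamoDB table name
    table = dynamoDB.Table('json-dynamoDB')
    table.put_item(Item=jsonDict)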
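One thing to watch for with the handler: the boto3 DynamoDB resource rejects Python `float` values, so a JSON file containing a number such as `10.5` fails at `put_item`. A possible hardening, sketched here with a hypothetical helper `parse_item`, is to parse floats as `Decimal` and check for the "emp_id" key before writing.

```python
import json
from decimal import Decimal


def parse_item(raw_body, key_name="emp_id"):
    """Parse the uploaded JSON into a dict that DynamoDB will accept."""
    # parse_float=Decimal avoids the float values that boto3 refuses.
    item = json.loads(raw_body, parse_float=Decimal)
    if not isinstance(item, dict):
        raise ValueError("expected a single JSON object")
    if key_name not in item:
        raise ValueError(f"missing primary key {key_name!r}")
    return item


def load_item(table, raw_body):
    """Validate the body, then write it to the given DynamoDB table."""
    item = parse_item(raw_body)
    table.put_item(Item=item)
    return item


print(parse_item(b'{"emp_id": "E100", "salary": 10.5}'))
# {'emp_id': 'E100', 'salary': Decimal('10.5')}
```

Inside the handler, `load_item(table, jsonFileReader)` would take the place of the `json.loads` and `put_item` lines.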

Step 6: Place the file in the S3 bucket and check the logs in the CloudWatch service
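Besides the CloudWatch console, the function's recent log lines can be pulled with boto3. This sketch assumes the function logs to the default log group, `/aws/lambda/<function name>`, and it only reads the first page of results. The function name in the example call is a placeholder.

```python
import time


def lambda_log_group(function_name):
    """Default CloudWatch log group name for a Lambda function."""
    return f"/aws/lambda/{function_name}"


def recent_log_messages(logs_client, function_name, minutes=10, now=None):
    """Return log messages from the last `minutes` minutes (first page only)."""
    now = time.time() if now is None else now
    # CloudWatch Logs expects timestamps in milliseconds since the epoch.
    start_ms = int((now - minutes * 60) * 1000)
    response = logs_client.filter_log_events(
        logGroupName=lambda_log_group(function_name),
        startTime=start_ms,
    )
    return [event["message"] for event in response.get("events", [])]


# Example call (requires AWS credentials, placeholder function name):
#   import boto3
#   for line in recent_log_messages(boto3.client("logs"), "example-loader"):
#       print(line, end="")
```

A successful run shows the usual START, END, and REPORT lines for the invocation. A failed `put_item` shows up as a traceback between them.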
